Scientific Computing, TU Berlin, WS 2019/2020, Lecture 03

Jürgen Fuhrmann, WIAS Berlin

Recap¶

- General info

- Adding packages

- Assignments, simple data types

- Vectors and matrices

- Basic control structures

Concrete types¶

- Every value in Julia has a concrete type

- Concrete types correspond to computer representations of objects

- Inquire type info using typeof()

- One can initialize a variable with an explicitly given fixed type

- At the time of this lecture this was possible only in the body of functions and for return values, not in the global context of Jupyter or the REPL (Julia 1.8 and later also accept type annotations on global variables)

function sometypes()

i::Int8=10

@show i,typeof(i)

x::Float16=5.0

@show x,typeof(x)

z::Complex{Float32}=15+3im

@show z,typeof(z)

return z

end

z1=sometypes()

@show z1,typeof(z1);

Vectors and Matrices have concrete types as well:

function sometypesv()

iv=zeros(Int8, 10)

@show iv,typeof(iv)

xv=[Float16(sin(x)) for x in 0:0.1:1]

@show xv,typeof(xv)

return xv

end

x1=sometypesv()

@show x1,typeof(x1);

Structs allow defining user-defined concrete types

struct Color64

r::Float64

g::Float64

b::Float64

end

c=Color64(0.5,0.5,0.1)

@show c,typeof(c);

Types can be parametrized (similar to arrays)

struct TColor{T}

r::T

g::T

b::T

end

c=TColor{Float16}(0.5,0.5,0.1)

@show c,typeof(c);
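As a small sketch of what the type parameter buys us: one struct definition yields a separate concrete type for each parameter value, each with its own memory footprint. The names `PColor` and `brightness` are made up for this illustration (a new struct name is used so that the block does not clash with `TColor` above).

```julia
# Hypothetical parametrized color type, analogous to TColor above
struct PColor{T<:Real}
    r::T
    g::T
    b::T
end

# The parameter can be inferred from the arguments ...
c64 = PColor(0.5, 0.5, 0.1)
# ... or given explicitly, in which case the arguments are converted
c16 = PColor{Float16}(0.5, 0.5, 0.1)

# Each parameter value gives a different concrete type with its own layout
@show typeof(c64), sizeof(c64)
@show typeof(c16), sizeof(c16)

# One generic method covers all parameter values (hypothetical helper)
brightness(c::PColor) = (c.r + c.g + c.b) / 3
@show brightness(c64)
```

The restriction `T<:Real` is an assumption of this sketch; the `TColor` definition above accepts any type for `T`.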

Functions, Methods and Multiple Dispatch¶

- Functions can have different variants of their implementation depending on the types of parameters passed to them

- These variants are called methods

- All methods of a function f can be listed by calling methods(f)

- The act of figuring out which method of a function to call depending on the types of the parameters is called multiple dispatch

function test_dispatch(x::Float64)

println("dispatch: Float64, x=$(x)")

end

function test_dispatch(i::Int64)

println("dispatch: Int64, i=$(i)")

end

test_dispatch(1.0)

test_dispatch(10)

methods(test_dispatch)
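The example above dispatches on a single argument. The "multiple" in multiple dispatch means that the types of all arguments take part in the method selection. A minimal sketch, with the made-up function name `combine`:

```julia
# Hypothetical function whose methods are distinguished by BOTH argument types
combine(a::Int, b::Int)         = "Int, Int"
combine(a::Int, b::Float64)     = "Int, Float64"
combine(a::Float64, b::Int)     = "Float64, Int"
combine(a::Float64, b::Float64) = "Float64, Float64"

@show combine(1, 2)
@show combine(1, 2.0)
@show combine(1.0, 2)
@show combine(1.0, 2.0)

# Four methods are registered for the one function `combine`
@show length(methods(combine))
```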

- Typically, Julia functions have lots of possible methods

- Each method is compiled to different machine code and can be optimized for the particular parameter types

using LinearAlgebra

methods(det)

The function/method concept roughly corresponds to C++14 generic lambdas

auto myfunc=[](auto &y, auto &x)

{

y=sin(x);

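};

is roughly equivalent to

function myfunc!(y,x)

# note: this rebinds the local name y only; for arrays, y .= sin.(x) modifies the caller's y in place

y=sin(x)

end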

Many generic programming approaches possible in C++ also work in Julia.

If not specified otherwise via parameter types, Julia functions are generic: "automatic auto"
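A short sketch of the "automatic auto" behaviour: a function without type annotations works for every argument type that supports the operations used in its body. The function name `square` is made up for this illustration.

```julia
# Hypothetical generic function: no type annotations at all
square(x) = x * x

@show square(3)              # integer multiplication
@show square(1.5)            # floating point multiplication
@show square(2//3)           # rational arithmetic
@show square("ab")           # for strings, * means concatenation
@show square([1 2; 3 4])     # for matrices, * is the matrix product
```

For each new argument type, Julia compiles a separate specialization of the same source code.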

Abstract types¶

- Abstract types label concepts which work for several concrete types without regard to their memory layout etc.

- All variables with concrete types corresponding to a given abstract type (must) share a common interface

- A common interface consists of a set of methods working for all types exhibiting this interface

- The functionality of an abstract type is implicitly characterized by the methods working on it

- "duck typing": use the "duck test" — "If it walks like a duck and it quacks like a duck, then it must be a duck" — to determine if an object can be used for a particular purpose
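The idea of an abstract type with a common interface can be sketched as follows. All names here (`Shape`, `Circle`, `Rectangle`, `area`, `total_area`) are invented for this illustration and are not part of the lecture code.

```julia
# Hypothetical abstract type: labels the concept "shape with an area"
abstract type Shape end

struct Circle <: Shape
    radius::Float64
end

struct Rectangle <: Shape
    width::Float64
    height::Float64
end

# The interface: every concrete Shape provides a method of `area`
area(c::Circle) = pi * c.radius^2
area(r::Rectangle) = r.width * r.height

# Generic code written against the interface only ("duck typing"):
# it works for any collection whose elements support `area`
total_area(shapes) = sum(area, shapes)

shapes = [Circle(1.0), Rectangle(2.0, 3.0)]
@show total_area(shapes)
```

Nothing in the language enforces that a new subtype of `Shape` defines `area`; a missing method only shows up as a `MethodError` when `total_area` is called.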

Examples of abstract types

function sometypesa()

i::Integer=10

@show i,typeof(i)

x::Real=5.0

@show x,typeof(x)

z::Any=15+3im

@show z,typeof(z)

return z

end

sometypesa()

Though we try to force the variables to have an abstract type, they end up having a concrete type which is compatible with the abstract type

The type tree¶

- Types can have subtypes and a supertype

- Concrete types are the leaves of the resulting type tree

- Supertypes are necessarily abstract

- There is only one supertype for every (abstract or concrete) type:

supertype(Float64)

- Abstract types can have several subtypes

using InteractiveUtils

subtypes(AbstractFloat)

- Concrete types have no subtypes

subtypes(Float64)

- "Any" is the root of the type tree and has itself as supertype

supertype(Any)
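Since every type has exactly one supertype and Any is its own supertype, one can walk from any type up to the root. A minimal sketch, with the made-up helper name `supertype_chain`:

```julia
# Hypothetical helper: collect the chain of supertypes from T up to Any
function supertype_chain(T::Type)
    chain = Type[T]
    while T !== Any          # Any is the root, stop there
        T = supertype(T)
        push!(chain, T)
    end
    return chain
end

@show supertype_chain(Float64)
@show supertype_chain(Int8)
```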

Walking the type tree

function showtypetree(T, level=0)

println(" " ^ level, T)

for t in subtypes(T)

showtypetree(t, level+1)

end

end

showtypetree(Number)

We can have a nicer walk through the type tree by implementing an interface method AbstractTrees.children for types:

using Pkg

Pkg.add("AbstractTrees")

using AbstractTrees

AbstractTrees.children(x::Type) = subtypes(x)

AbstractTrees.print_tree(Number)

Abstract types are used to dispatch between methods as well

function test_dispatch(x::AbstractFloat)

println("dispatch: $(typeof(x)) <:AbstractFloat, x=$(x)")

end

function test_dispatch(i::Integer)

println("dispatch: $(typeof(i)) <:Integer, i=$(i)")

end

test_dispatch(one(Float16))

test_dispatch(10)

methods(test_dispatch)

Now, depending on the input type for test_dispatch, a generic or a specific method is called

Testing of type relationships

@show Float64<: AbstractFloat

@show Float64<: Integer

@show Int16<: AbstractFloat;

The power of multiple dispatch¶

- Multiple dispatch is one of the defining features of Julia

- Combined with the hierarchical type system it allows for powerful generic program design

- New datatypes (different kinds of numbers, differently stored arrays/matrices) work with existing code once they implement the same interface as existing ones.

- In some respects C++ comes close to it, but at the price of more, and less obvious, code
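To illustrate how a new number type can work with existing code, here is a minimal sketch of a dual number a + b·ε with ε² = 0, which carries a derivative along with a value. The type `Dual` and the function `poly` are invented for this example, and only `+` and `*` are implemented, so this is far from a complete number interface.

```julia
# Hypothetical minimal dual number type as a new subtype of Number
struct Dual <: Number
    val::Float64   # function value
    der::Float64   # derivative value
end

# Implement the small part of the number interface needed below
Base.:+(x::Dual, y::Dual) = Dual(x.val + y.val, x.der + y.der)
Base.:*(x::Dual, y::Dual) = Dual(x.val * y.val, x.val * y.der + x.der * y.val)

# Generic code written without any knowledge of Dual
poly(x) = x * x * x + x * x + x

# Evaluating at Dual(2, 1) yields poly(2) and its derivative at x = 2
d = poly(Dual(2.0, 1.0))
@show d

# The library function sum works with the new type as well
s = sum([Dual(1.0, 2.0), Dual(3.0, 4.0)])
@show s
```

Here `poly(2) = 14` and the derivative `3x^2 + 2x + 1` evaluates to `17` at `x = 2`, which is what the two fields of `d` contain.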

Just-in-time compilation and Performance¶

- Just-in-time compilation is another feature setting Julia apart

- Julia uses the tools from the LLVM Compiler Infrastructure Project to organize on-the-fly compilation of Julia code to machine code

- Tradeoff: startup time for code execution in interactive situations

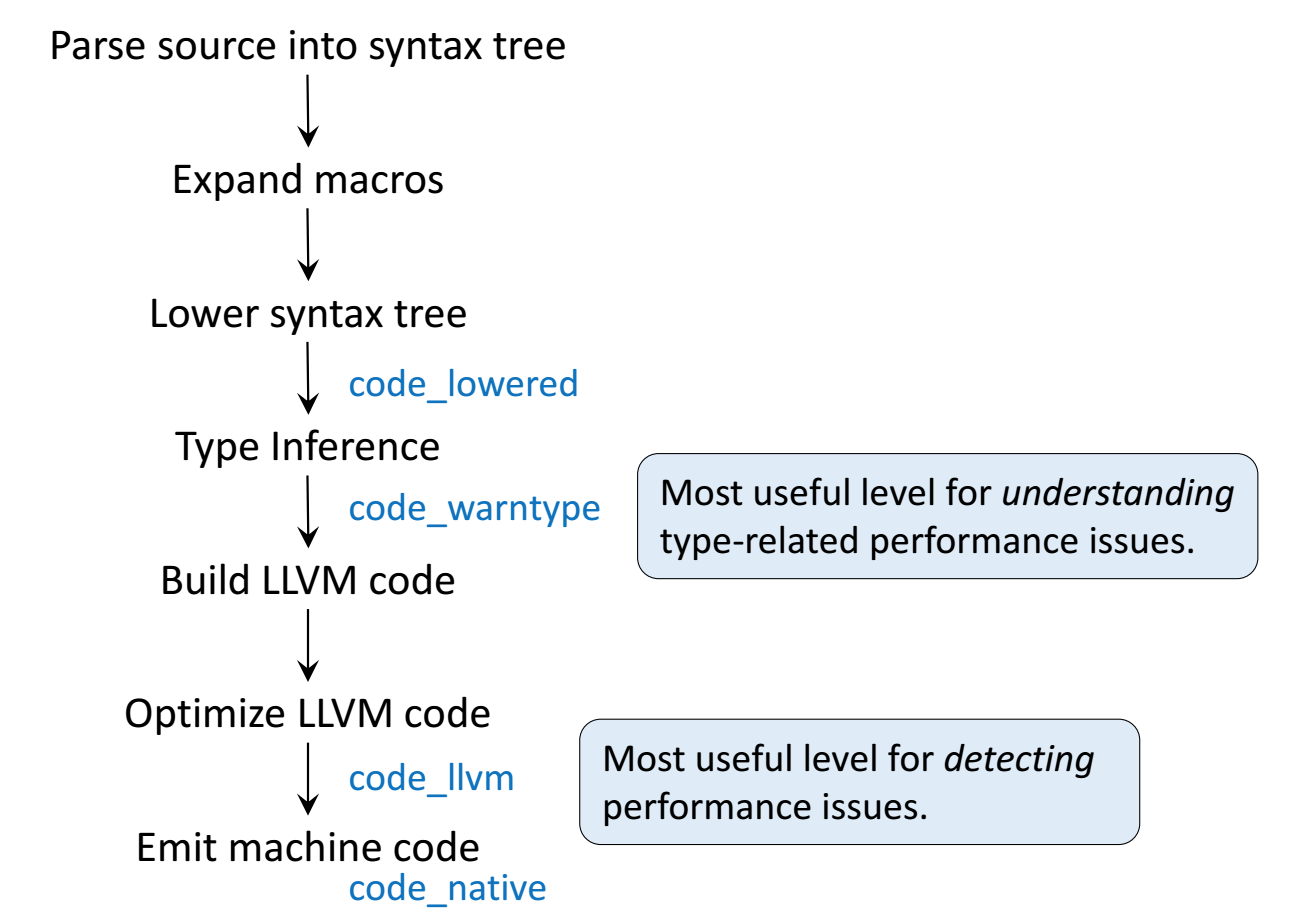

- Multiple steps: Parse the code, analyze data types etc.

- Intermediate results can be inspected using a number of macros

From [Introduction to Writing High Performance Julia](https://docs.google.com/viewer?a=v&pid=sites&srcid=ZGVmYXVsdGRvbWFpbnxibG9uem9uaWNzfGd4OjMwZjI2YTYzNDNmY2UzMmE) by D. Robinson

Inspecting the code transformation¶

Define a function

g(x)=x+x

@show g(2)

methods(g)

Parse into abstract syntax tree and lower it

@code_lowered g(2)

println("-------------------------------------")

@code_lowered g(2.0)

Type inference according to input

@code_warntype g(2)

println("-------------------------------------")

@code_warntype g(2.0)

LLVM intermediate representation (IR)

@code_llvm g(2)

println("-------------------------------------")

@code_llvm g(2.0)

Native assembler code

@code_native g(2)

println("-------------------------------------")

@code_native g(2.0)

Performance¶

Macros for performance testing:

- @elapsed: wall clock time used

- @allocated: number of bytes allocated

- @time: elapsed time together with allocation statistics

- @benchmark: Benchmarking small pieces of code (from the BenchmarkTools package)
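A minimal sketch of how the first two macros return their measurements as values, so that they can be stored and processed. The workload function `sumsq` is made up for this illustration.

```julia
# Hypothetical workload: sum of squares of the first n integers
function sumsq(n)
    s = 0
    for i in 1:n
        s += i^2
    end
    return s
end

sumsq(10)                          # first call triggers compilation
t = @elapsed sumsq(1_000_000)      # wall clock time in seconds
b = @allocated sumsq(1_000_000)    # number of bytes allocated during the call
@show t, b
```

The measured values depend on the machine and on the Julia version, so no particular numbers should be expected.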

Time twice in order to skip compilation time¶

function ftest(v::AbstractVector)

result=0

for i=1:length(v)

result=result+v[i]^2

end

return result

end

@time ftest(ones(Float64,100000))

@time ftest(ones(Float64,100000))

Run for a different type

@time ftest(ones(Int64,100000))

@time ftest(ones(Int64,100000))

Julia performance gotchas:¶

- Variables changing types

- Type change assumed to be always possible in global context (outside of a function)

- Type change due to inconsistent programming

- Memory allocations for intermediate results

Performance in global context¶

As an exception, for this example we use the CPUTime package which works without macro expansion.

Pkg.add("CPUTime")

using CPUTime

Declare a long vector

myvec=ones(Float64,1000000);

Sum up its values

CPUtic()

begin

x=0.0

for i=1:length(myvec)

global x

x=x+myvec[i]

end

@show x

end

CPUtoc();

Alternatively, put the sum into a function

function mysum(v)

x=0.0

for i=1:length(v)

x=x+v[i]

end

return x

end

Run again

CPUtic()

begin

@show mysum(myvec)

end

CPUtoc();

What happened ?¶

Julia Gotcha #1: The REPL (terminal) is the Global Scope.

- So is the Jupyter notebook

- Julia is unable to dispatch on variable types in the global scope as they can change their type anytime

- In the global context it has to put all variables into "boxes" allowing to dispatch on their type at runtime

- Avoid this situation by always wrapping your critical code into functions
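The recommended pattern can be sketched as follows: keep only data definitions in the global scope and pass the data to a function as an argument. The names `data`, `total_global` and `total` are made up for this illustration.

```julia
# Hypothetical test data, smaller than the vector used above
data = ones(Float64, 1000)

# Pattern to avoid: a loop at top level working on non-constant globals
total_global = 0.0
for i in 1:length(data)
    global total_global
    total_global += data[i]
end

# Recommended pattern: the data enters the function as an argument,
# so the compiler knows its concrete type inside the function body
function total(v)
    s = zero(eltype(v))
    for x in v
        s += x
    end
    return s
end

@show total_global == total(data)
```

Both variants compute the same value; the difference lies only in how well the compiler can specialize the loop.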

Type stability¶

Use @benchmark for testing small functions

Pkg.add("BenchmarkTools")

using BenchmarkTools

function g()

x=1

for i = 1:10

x = x/2

end

return x

end

@benchmark g()

function h()

x=1.0

for i = 1:10

x = x/2

end

return x

end

@benchmark h()

@code_native g()

@code_native h()

Once again, "boxing" occurs to handle x: in g() it changes its type from Int64 to Float64:

@code_warntype g()

@code_warntype h()

So, when in doubt, explicitly declare types of variables
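A sketch of this advice applied to g(): with a declared type, the integer literal is converted on assignment and x keeps the same type throughout the loop. The function name `g_declared` is made up; `@inferred` comes from the Test standard library and throws an error if the inferred return type differs from the actual one.

```julia
using Test

# Variant of g() with an explicitly declared variable type:
# the integer literal 1 is converted to Float64 on assignment
function g_declared()
    x::Float64 = 1
    for i = 1:10
        x = x / 2
    end
    return x
end

# Passes only if the compiler infers the concrete return type
result = @inferred g_declared()
@show result, typeof(result)
```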

Structuring your code: modules, files and packages¶

- Complex code is split up into several files

- Avoid name clashes for code from different places

- Organize the way to use third party code

Modules¶

- Modules allow encapsulating implementations in separate namespaces

module TestModule

function mtest(x)

println("mtest: x=$(x)")

end

export mtest

end

- Module content can be accessed via qualified names

TestModule.mtest(13)

- "using" makes all exported content of a module available without prefixing

- The '.' before the module name refers to local modules defined in the same file

using .TestModule

mtest(23)
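The difference between exported and non-exported names can be sketched with a second local module. The module `Shapes2D` and its functions are invented for this illustration.

```julia
# Hypothetical module with one exported and one non-exported function
module Shapes2D

export square_area

square_area(a) = a^2
circle_area(r) = pi * r^2   # not exported

end

using .Shapes2D

@show square_area(2)            # exported: available without prefix
@show Shapes2D.circle_area(1)   # not exported: needs the qualified name
```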

Finding modules in the file system¶

- Put single file modules, in files having the same name as the module, into a directory which is on the LOAD_PATH

- Call "using" or "import" with the module name

- You can modify your LOAD_PATH by adding e.g. the current directory

push!(LOAD_PATH, pwd())

Do this e.g. in the startup file .julia/config/startup.jl

- Create a module in the file system (normally, use your editor...)

(yes, we can have multiline strings delimited by """, with " inside them)

open("TestModule1.jl", "w") do io

write(io, """

module TestModule1

function mtest1(x)

println("mtest1: x=",x)

end

export mtest1

end

""")

end

- Import, enabling qualified access

import TestModule1

TestModule1.mtest1(23)

- Import, enabling unqualified access of exported names

using TestModule1

mtest1(23)

Packages in the file system¶

- Packages are found via the same mechanism

- Part of the load path are the directory with downloaded packages and the directory with packages under development

- Each package is a directory named Package with a subdirectory src

- The file Package/src/Package.jl defines a module named Package

- More structures in a package:

- Documentation build recipes

- Test code

- Dependency description

- UUID (universally unique identifier)

- Default packages (e.g. the package manager Pkg) are always available

- Use the package manager to check out a new package via the registry
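To see the package layout described above, one can let the package manager generate a skeleton. This is a sketch under the assumption that `Pkg.generate` is acceptable for this purpose; the package name `HypotheticalPkg` is made up, and the skeleton is placed in a temporary directory so that nothing in the working directory is touched.

```julia
using Pkg

# Generate a package skeleton in a temporary directory
# ("HypotheticalPkg" is a made-up name)
skeleton_dir = joinpath(mktempdir(), "HypotheticalPkg")
Pkg.generate(skeleton_dir)

# The dependency description contains the package name and its UUID ...
println(read(joinpath(skeleton_dir, "Project.toml"), String))

# ... and src/HypotheticalPkg.jl defines the module HypotheticalPkg
println(read(joinpath(skeleton_dir, "src", "HypotheticalPkg.jl"), String))
```

Test code and documentation build recipes are not part of this minimal skeleton and would have to be added by hand.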

Including code from files¶

- The include function just evaluates the code contained in a given file

open("myfile.jl", "w") do io

write(io, """myfiletest(x)=println("myfiletest: x=",x)""")

end

include("myfile.jl")

myfiletest(23)

How to return homework¶

- For homework assignments I want you to write single file modules with a standard structure

module MyHomework

function main(;optional_parameter=nothing)

println("Hello World")

end

end

This notebook was generated using Literate.jl.